We Want AI That Reads Our Mind (But Should We?)

I'm frustrated with today's AI assistants. They're glorified search engines that wait for me to tell them exactly what I want. I want an AI that anticipates my needs, organizes my scattered thoughts, and suggests my next step before I realize I need one.

I want an AI that understands what I mean, not what I say.

The Mind-Reading Fantasy

Picture this: Monday morning, your AI notices you've been in a document for 40 minutes without making changes. It knows you're stuck on the introduction and suggests outlining main points first. Friday afternoon, it reminds you that finishing the Henderson project now would clear your Monday morning, based on your work patterns.

This isn't science fiction. The technology exists. McKinsey projects that anticipatory AI could generate $1 trillion in global productivity gains by 2030. Companies are already building systems that learn user behavior and make proactive suggestions.

The Privacy Price Tag

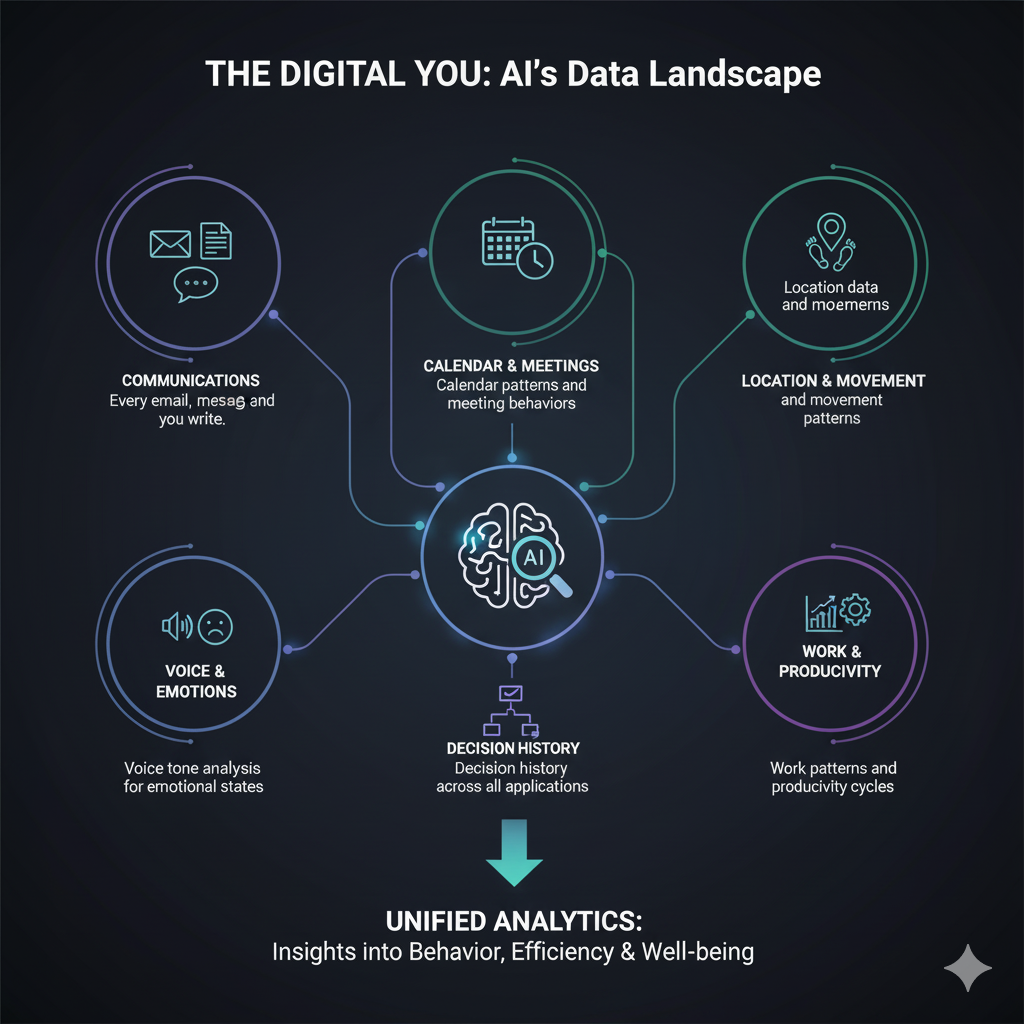

For AI to read your mind, it needs comprehensive access to your digital life:

- Every email, message, and document you write

- Calendar patterns and meeting behaviors

- Location data and movement patterns

- Voice tone analysis for emotional states

- Decision history across all applications

- Work patterns and productivity cycles

This isn't casual data collection. It's systematic life monitoring that makes current privacy concerns look trivial. Research shows that 40% of voice assistant users already worry about data usage, and that's with relatively simple systems.

The data problem gets worse over time. AI companies routinely use terabytes of personal information for training, and once your data becomes part of an AI model, complete deletion is nearly impossible. Your patterns, preferences, and personal information become permanently embedded in systems you can't control.

The Hidden Costs

Cognitive Dependency: When AI organizes your thoughts and predicts your needs, what happens to your own decision-making abilities? Research on GPS navigation shows that people lose spatial reasoning skills when they rely on turn-by-turn directions. The same risk applies to cognitive tasks.

Echo Chamber Effect: AI that perfectly predicts what you want based on past patterns might trap you in behavioral loops. Instead of helping you grow, it optimizes for historical preferences, potentially limiting discovery and change.

Accuracy Paradox: The better AI gets at prediction, the more frustrated you become when it fails. Studies show that sophisticated AI systems raise user expectations, so their inevitable mistakes feel more jarring than a simpler tool's would.

What's Actually Working

The best human assistants don't need comprehensive surveillance to be incredibly helpful. They develop intuition through observation, reading body language, noticing patterns in speech, and understanding context from brief interactions. They anticipate needs without monitoring every email or tracking every movement.

Interestingly, some of the most effective AI systems mirror this approach. A recent study found that a simple AI agent called "Alita" outperformed complex, tool-heavy systems by focusing on self-learning rather than predefined capabilities.

Research suggests that simpler models often generalize better and prove more robust in real-world applications, while complex models tend to overfit and learn noise rather than true patterns. The question becomes: can AI develop the same intuitive observation skills that make human assistants valuable, without requiring invasive data collection?

The Alternative Approach

Instead of comprehensive life monitoring, consider AI that develops intuition through:

Contextual Observation: Reading cues from current interactions rather than historical surveillance. Understanding tone, pacing, and word choice in real-time conversations.

Specialized Tools: AI that excels at specific tasks (coding, writing, scheduling) and becomes intuitive within those domains through focused observation.

Privacy-First Design: Systems that process data locally when possible and develop insights from interaction patterns rather than comprehensive data collection.

Human-Controlled Proactivity: AI that learns to suggest through brief interactions, like a good human assistant who picks up on subtle cues without needing your life story.

The Real Question

The technology to build mind-reading AI exists. You could have an AI that knows you better than you know yourself, anticipates your every need, and optimizes your daily decisions. But that assistant requires surrendering your privacy, potentially your cognitive autonomy, and possibly your capacity for surprise and growth. The likely result is that you get lazy and stop thinking for yourself.

I'd prefer an AI that helps me think better rather than one that thinks for me. When asked, I think most people would agree, but in practice I suspect most will take the easy path without even realizing it. We are wired to take mental shortcuts, and it takes real intention to think for yourself rather than follow the crowd.
